The principle of locality states that programs access a relatively small portion of their address space at any instant of time.

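- Temporal locality (locality in time): an item that was referenced recently tends to be referenced again soon.

- Spatial locality (locality in space): items whose addresses are close to a referenced item tend to be referenced soon.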
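As a rough illustration of the two kinds of locality, the sketch below builds the address trace of a loop that sums an array, then counts how often an access repeats a recent address (temporal) or lands near one (spatial). The names `locality_stats`, `window`, and `near`, the addresses, and the 4-byte element size are all assumptions made for this example.

```python
from collections import deque

def locality_stats(trace, window=8, near=16):
    """Return (temporal, spatial) fractions for a list of byte addresses.

    temporal: the same address appeared in the last `window` accesses.
    spatial:  a different address within `near` bytes appeared in that window.
    """
    recent = deque(maxlen=window)
    temporal = spatial = 0
    for addr in trace:
        if addr in recent:
            temporal += 1
        elif any(abs(addr - r) <= near for r in recent):
            spatial += 1
        recent.append(addr)
    n = len(trace)
    return temporal / n, spatial / n

# Hypothetical trace: sum 64 four-byte array elements into one accumulator.
ARRAY_BASE, ACC_ADDR = 0x1000, 0x8000
trace = []
for i in range(64):
    trace.append(ARRAY_BASE + 4 * i)  # a[i]: walks forward (spatial locality)
    trace.append(ACC_ADDR)            # sum: same address each time (temporal)

temporal, spatial = locality_stats(trace)
print(f"temporal: {temporal:.2f}, spatial: {spatial:.2f}")
```

Almost every access in this trace falls into one of the two categories, which is the behaviour a cache relies on.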

Viewed as a thread through space (2D) and time, temporal locality shows the inertia of the thread, and spatial locality shows a small energy disturbance (an additive disturbance) around it.

Because programs behave this way, and because memory technologies trade speed against size, we can combine different types of memory into a hierarchy, giving our programs a more efficient memory model with non-uniform access time.

The idea of a cache is to speed up certain regions of the memory map. The minimum unit (granularity) that the cache accelerates is called a block (or line). Because the cached blocks are sparsely distributed over the memory map, a requested block may not be present in the cache; that is a cache miss. The miss rate is the fraction of memory accesses not found in a given level of the memory hierarchy.

The hit time is the time required to access a level of the memory hierarchy, including the time needed to determine whether the access is a hit or a miss. It is normally much shorter than the time to access the next lower level.

The miss penalty is the time required to fetch a block into a level of the memory hierarchy from the lower level. It includes the time to access the block, transmit it from one level to the other, insert it in the level that experienced the miss, and then pass the block to the requestor. The details of the data transfer are hidden from the requestor, which only experiences the extra latency and does not need to worry about data integrity.
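Miss rate, hit time, and miss penalty are commonly combined into an average memory access time: hit time + miss rate × miss penalty. The sketch below assumes that textbook formula; the function names and the cycle counts are made up for illustration.

```python
def miss_rate(misses, accesses):
    """Fraction of accesses not found in this level of the hierarchy."""
    return misses / accesses

def average_access_time(hit_time, rate, miss_penalty):
    """Average memory access time, in the same unit as hit_time."""
    return hit_time + rate * miss_penalty

# Hypothetical numbers: 1-cycle hit, 100-cycle miss penalty, 5 misses in 100.
rate = miss_rate(misses=5, accesses=100)
amat = average_access_time(hit_time=1, rate=rate, miss_penalty=100)
print(f"miss rate = {rate:.2f}, average access time = {amat:.1f} cycles")
```

Even a small miss rate dominates the average when the miss penalty is large, which is why the requestor notices misses only as extra latency.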

direct mapped cache

A cache structure in which each memory location is mapped to exactly one location in the cache. The usual mapping is (block address) modulo (number of blocks in the cache).

From the graph we can see that the cache simply partitions the address into two parts, the tag and the index (leaving aside any offset bits within a block). The index is used to select a block, and the tag is the information used to decide whether the access is a hit or a miss.
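The tag/index split can be sketched in a few lines. This assumes the number of cache blocks is a power of two and that the address is already a block address (no offset bits); `split_address` and `index_bits` are names invented for the example.

```python
def split_address(block_addr, index_bits):
    """Split a block address into (tag, index) for a direct-mapped cache
    holding 2**index_bits blocks."""
    index = block_addr & ((1 << index_bits) - 1)  # low bits select the block
    tag = block_addr >> index_bits                # remaining high bits
    return tag, index

# Hypothetical 8-block cache (3 index bits), block address 0b10110.
tag, index = split_address(0b10110, index_bits=3)
print(f"tag={tag:b} index={index:03b}")
```

Taking the low bits as the index is the same as computing the block address modulo the number of blocks.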

This direct-mapped cache offers lower memory latency evenly across the address space, but at run time the regions that actually get the lower latency are scattered.

The diagram shows how memory is partitioned for a direct-mapped cache. Low access time is possible if the location's tag matches the tag stored in its cache block. Note that only one region can be cached in a given block at a time.
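A minimal simulator makes the "only one region per block" point concrete. This is a sketch, not a model of any particular hardware: it tracks tags only (no data), and the class name and the access sequence are assumptions chosen for illustration.

```python
class DirectMappedCache:
    """Tag-only model of a direct-mapped cache addressed by block address."""

    def __init__(self, num_blocks):
        self.num_blocks = num_blocks
        self.tags = [None] * num_blocks  # None marks an invalid (empty) block
        self.hits = self.misses = 0

    def access(self, block_addr):
        """Return True on a hit, False on a miss (the block is then filled)."""
        index = block_addr % self.num_blocks
        tag = block_addr // self.num_blocks
        if self.tags[index] == tag:
            self.hits += 1
            return True
        self.tags[index] = tag  # replace whatever region was cached here
        self.misses += 1
        return False

cache = DirectMappedCache(num_blocks=8)
results = [cache.access(a) for a in [22, 26, 22, 26, 16, 3, 16, 18]]
print(results, cache.hits, cache.misses)
```

Block addresses 26 and 18 both map to index 2, so the access to 18 evicts 26, and a later access to 26 would miss again even though the rest of the cache is mostly empty.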

about the block size

Here granularity and sparsity are the keys to cache performance. The block size is the granularity, and a bigger block makes each block replacement take longer. Some improvements can help, such as early restart and requested-word-first schemes, although how much they help depends on the access pattern of the program (e.g., instruction cache versus data cache).
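The dependence on access pattern can be seen in a small experiment. The sketch below holds the cache capacity fixed and varies the block size, so larger blocks mean fewer of them. It measures miss rate only and does not model the longer replacement time of big blocks. The function name, capacity, and traces are assumptions for illustration.

```python
def miss_rate(trace, cache_words, block_words):
    """Miss rate of a direct-mapped cache of fixed capacity (in words)
    for a trace of word addresses."""
    num_blocks = cache_words // block_words
    tags = [None] * num_blocks  # None marks an empty block
    misses = 0
    for word_addr in trace:
        block_addr = word_addr // block_words
        index = block_addr % num_blocks
        tag = block_addr // num_blocks
        if tags[index] != tag:
            tags[index] = tag
            misses += 1
    return misses / len(trace)

sequential = list(range(256))  # walks through memory word by word
scattered = [7, 64] * 50       # alternates between two distant words

for block_words in (1, 2, 4, 8):
    seq = miss_rate(sequential, cache_words=64, block_words=block_words)
    sca = miss_rate(scattered, cache_words=64, block_words=block_words)
    print(f"block={block_words} words: sequential={seq:.3f} scattered={sca:.3f}")
```

For the sequential trace, doubling the block size halves the miss rate. For the scattered trace, the largest block size makes the two words compete for the same block, so every access misses.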

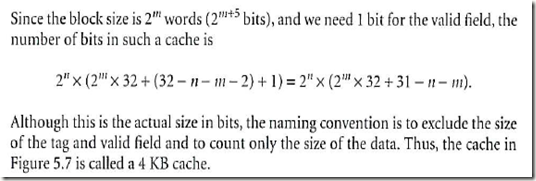

There is also a formula for calculating the cache's resource consumption.
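The formula itself is not written out in the text, so the sketch below assumes the common textbook form for a direct-mapped cache: total bits = 2^n × (data bits per block + tag bits + 1 valid bit), with 2^n blocks of 2^m words each. The function and parameter names are invented for the example.

```python
def cache_total_bits(index_bits, block_words_log2,
                     address_bits=32, word_bytes_log2=2):
    """Total storage bits of a direct-mapped cache.

    index_bits       n: the cache has 2**n blocks
    block_words_log2 m: each block has 2**m words
    word_bytes_log2  each word has 2**word_bytes_log2 bytes (assumed 4 bytes)
    """
    tag_bits = address_bits - (index_bits + block_words_log2 + word_bytes_log2)
    data_bits = (1 << block_words_log2) * (8 << word_bytes_log2)
    return (1 << index_bits) * (data_bits + tag_bits + 1)  # +1 for the valid bit

# Hypothetical cache: 16 KiB of data, 4-word blocks, 32-bit addresses.
# 16 KiB = 4096 words = 1024 blocks, so n = 10 and m = 2.
total = cache_total_bits(index_bits=10, block_words_log2=2)
print(total, "bits, of which", 1024 * 128, "are data")
```

The gap between the total and the data bits is the overhead of tags and valid bits, which shrinks per data bit as blocks get larger.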

Cache miss handling

Using a stall rather than an interrupt when a miss occurs is a balance between the cost of
